It’s a hot topic at the moment, if anyone’s been keeping up with agile metrics news - and I’m sure you all have.

But before we get into all that, let’s start with what’s a delivery metric and why should I care?

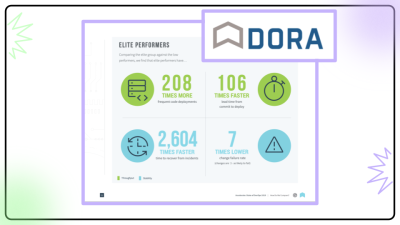

Given this is a DevOps conference, there’s a good chance a lot of people here have heard of DORA metrics. You’ve probably also heard of sprint velocity, cycle time, pull request count, lines of code... These are all metrics that can be used, with varying degrees of success, to measure the performance of an engineering team.

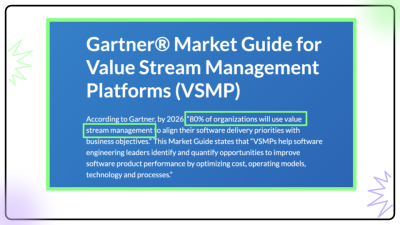

And why should you care about them? Well this partly depends on where you sit in your company’s org chart, but given that over $1b of VC funding has gone to metric tracking applications over the last couple of years, wherever you are there’s a good chance that even if you don’t care your boss does, or their boss, or the person in charge of the money does…

Now I’ve never been in charge of any money, and I’ve never been a C-level person, but I have been a developer for many years and was also a team lead for a couple of them so I can tell you why I cared and what metrics I think are good and bad and why. YMMV, of course.

Whatever position you are in, as with most things, the first question you need to answer before deciding what metrics or measurements you want to track is WHY you are doing this: what is it you want to achieve, and what problems are you trying to solve?

As part of the dev team I was all about team efficiency, not for some greater good or anything, I am just a very easily distracted person so if a pipeline took too long or I had to wait for a PR to be reviewed I’d be off doing something else and you’ve lost me for an hour.

So measuring stuff like the time between a PR being submitted and its review starting, or how long unit tests take to run, would be useful. These measures highlight any impediments in your process. They could be tracked for a bit, then discussed in a retro, and the team could decide what, if anything, to do about them.
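To make that concrete, here is a minimal sketch of the PR review wait measure. The records are made up for illustration; in practice they would come from whatever your code hosting tool exposes, and the names `pull_requests`, `review_wait_times` and `median_review_wait` are hypothetical. Note that it reports a team-level median, not a per-person number.

```python
from datetime import datetime, timedelta
from statistics import median

# Hypothetical records: (PR id, time submitted, time the first review started).
pull_requests = [
    ("PR-1", datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 9, 30)),
    ("PR-2", datetime(2024, 3, 4, 11, 0), datetime(2024, 3, 4, 15, 0)),
    ("PR-3", datetime(2024, 3, 5, 10, 0), datetime(2024, 3, 6, 10, 0)),
]


def review_wait_times(prs):
    """Return the wait between submission and first review for each PR."""
    return [review_started - submitted for _, submitted, review_started in prs]


def median_review_wait(prs):
    """Median wait across the team's PRs (less skewed by one outlier than a mean)."""
    return median(review_wait_times(prs))


team_median = median_review_wait(pull_requests)
print(f"Median wait for first review: {team_median}")
```

A median is used here on the assumption that one PR left over a weekend shouldn't dominate the number the team talks about in the retro; a mean or a percentile would be an equally reasonable choice.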

As a team lead I was more concerned about my team’s performance as a whole, partly so we knew where to focus our energies - like is the release process difficult and slow, and partly to justify our existence to the CTO (it was quite a dysfunctional company) - but quantifying the ROI of a software team is a question that comes up quite often.

For whole team performance you can’t go too wrong with the DORA metrics. Now I don’t have time to go into detail about these today, but if you have even a passing interest in this stuff then go read about them. They’re based on proper scientific research, done over a number of years by the DORA team (now part of Google), into what makes a high performing engineering team.

They found a combo of high speed and high stability was the answer - obvious really - and came up with 4 key metrics to measure those things. They are pretty standard these days and you can compare your numbers against Google’s data and see if you are doing alright or not (in their opinion…)
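For reference, the four metrics are usually given as deployment frequency, lead time for changes, change failure rate and time to restore service. The sketch below shows roughly how they could be calculated from a list of deployment records. The `Deployment` record, its field names and the `dora_metrics` function are all assumptions made for illustration, not anything defined by DORA, and measuring restore time from the moment of deployment is a simplification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a failure in production?
    restored_at: Optional[datetime] = None  # when service was restored, if it failed


def dora_metrics(deployments, period_days):
    """Team-level sketch of the four key metrics over a reporting period."""
    if not deployments:
        raise ValueError("need at least one deployment in the period")
    failures = [d for d in deployments if d.failed]
    # Simplification: restore time is measured from the failing deployment.
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        # Speed
        "deployment_frequency_per_day": len(deployments) / period_days,
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deployments),
        # Stability
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Made-up week of deployments: four releases, one of which failed.
week = [
    Deployment(datetime(2024, 3, 4, 9), datetime(2024, 3, 4, 11)),
    Deployment(datetime(2024, 3, 5, 9), datetime(2024, 3, 5, 13)),
    Deployment(datetime(2024, 3, 6, 9), datetime(2024, 3, 6, 15),
               failed=True, restored_at=datetime(2024, 3, 6, 16)),
    Deployment(datetime(2024, 3, 7, 9), datetime(2024, 3, 7, 17)),
]
metrics = dora_metrics(week, period_days=7)
for name, value in metrics.items():
    print(f"{name}: {value}")
```

The first two numbers cover speed and the last two cover stability, which is why they are best read together rather than one at a time.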

The most important feature of the metrics I used in both roles is that they were always team based. The ‘rockstar developer’ stereotype is dead, or at least should be, so measuring individual contribution in a team situation is a bad idea that can only ever have a negative impact on that team.

The second most important feature is that none of these metrics were secret: how and why they were calculated was always made clear, as was what the results were used for.

The very act of measuring something will change a person’s behaviour - if you know your deployment frequency is being tracked and you have a choice between doing a couple of small releases today or one big one tomorrow your decision will be influenced because you know someone’s watching.

There’s no metric in software that isn’t based on humans in some way, and anything based on people and their choices can and will be manipulated. A person in a position of authority can use this to incentivise the type of behaviour they want to see, but this is a dangerous path, as developers are a cynical bunch and if we feel we’re being played we will play you right back. You’ll suddenly find every ticket in a sprint is a 13 if you’ve decided we need to increase the number of story points we complete. And let’s not even talk about lines of code…

This is not exactly a call to arms. If you work for a company big enough and rich enough to use one of these massive management consultancies then one person rage-quitting over a dodgy graph is probably not going to make much difference. It’s more like, awareness.

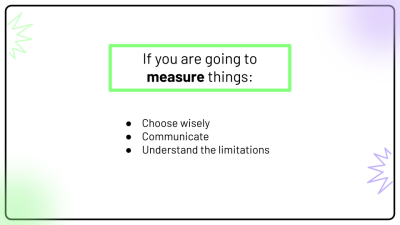

If you're going to measure things, choose wisely - do your metrics actually tell you what you think they are telling you? Communicate - if the people whose work you are measuring understand what you’re doing and why, they are much more likely to help rather than undermine or fiddle the numbers. Understand the limitations - software delivery is not magic and it can be quantified… to a certain extent. Uncertainty is a huge part of our jobs, as is creativity, and reducing us to a number of tickets or a resolution time only tells part of the story.

Filler in case I bugger up the timings